Improve Data Quality with Device Person Project Strategy

Blog | August 17, 2021

Data quality isn’t something that immediately comes to mind when talking about the housing boom of the 1950s, but when it comes to online survey data collection, there’s a definite connection.

After World War II, large subdivisions of homes started to pop up outside of major cities. These cookie-cutter houses went up quickly and were easy to spot. The standardized approach ensured consistent quality and a reliable return.

That all changed in the late 1990s. Land scarcity pushed builders to focus on what they were good at: acquiring land. Instead of employing specialists in trades such as plumbing, HVAC, or tiling, they subcontracted the work. Companies optimized margins while still producing a high volume of houses. Quality was compromised, and standards took a nosedive as contractors became heavily fragmented. The houses in a community were still sold by a single vendor, yet owners' experiences could vary widely.

This may be a provocative statement, but it feels like the modern market research landscape is in a similar state.

Experts Get the Job Done

Historically, evaluating vendors and isolating quality players was simple, and careful selection helped optimize the quality of the online sample. But, like the housing shift, the advent of programmatic sampling upended researchers' ability to control for data quality. Now, selecting a specific vendor or panel no longer guarantees adequate data without a significant amount of tedious cleansing.

To go back to the housing example, it's like taking possession of your new home only to find there's still scrap material all over the place. There is no flooring, and the doorknobs are missing. You have to finish the last pieces yourself to make the house livable. If installing flooring and drilling knob holes isn't in your skill set, it's going to be a frustrating, expensive process.

Like building a house, creating a high-quality data set requires a strategy before entering the field. A good strategy proactively uses systems and processes that weed out issues like fraud or duplicate respondents in advance. It validates that each participant is who they claim to be and that their answers are coherent, accurate, and usable.

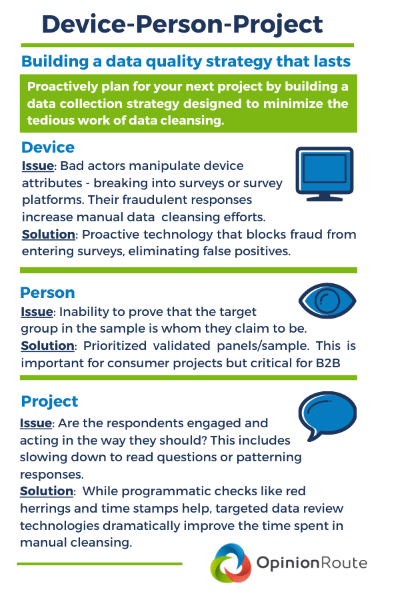

At OpinionRoute, we call this a Device Person Project strategy, and it's the key to building a high-quality data set that yields accurate results.

Data Quality as a Strategy

Each module in a Device Person Project strategy allows market researchers to address fraudulent behavior, validate participants, or isolate problematic verbatims. Together, the modules enable researchers to field surveys that produce well-architected data sets. This saves the time otherwise spent on DIY tools that may filter out bad responses but can't get at the root issues of fraud or identity.

Instead of spending hours resolving IP addresses, looking for incoherent entries, or deploying traps to catch bots, you can leverage tools from experts in data collection strategy.

If you relate to one of our clients, who told us, "I've spent more than a year from the last five years cleaning data," then we have a solution for you. While you were going row by row, OpinionRoute was busy perfecting technology solutions and processes that allow market researchers to focus on insights instead of spending one-fifth of their available time cleansing data.

Don't Go It Alone

To learn more about deploying a Device Person Project strategy, let's talk. We can discuss your current approach and implement a proactive plan for your next project. By prioritizing quality data collection, we can take work off your shoulders so you can focus on completing the project and delighting your client.